In the year since we announced the Drift Automation bot in September 2019, we’ve shared success stories about what our bot can do. But until now, we haven’t told you HOW we do it. With the award of Patent #10,818,293, titled “Selecting a response in a multi-turn interaction between a user and a conversational bot,” we can now give you a peek under the hood and explain some of our secret sauce 🤖

Fluid Context Switching

Put simply, our bot is different. Bots on the market today primarily come in two flavors – those that follow a rigid script or tree to ask prospects a series of questions, and reactive bots that wait for the prospect to ask a question.

Drift’s bot, on the other hand, is more flexible, fluidly mixing proactive lead qualification with reactive answers to prospects’ questions. We enable nimble context switching to give a prospect the opportunity to qualify a vendor, while the vendor’s bot qualifies the lead.

Let’s illustrate this with an example from authentication and identity provider Okta.

Figure 1: An Automation AI Bot transcript where the bot seamlessly handles the prospect switching contexts.

In Figure 1, the prospect at first plays along, answering the bot’s questions for a couple of turns. But then it happens: the bot pops the question, “Want to speak to someone to figure out how Okta can make your tech stack work better for you?” The prospect realizes he is not ready to commit. He has questions of his own. So he interrupts the flow and expresses several thoughts spanning five sentences. Drift AI gracefully answers the prospect’s question and ushers the qualified lead into a live chat with the sales team.

The Architecture of a Bot

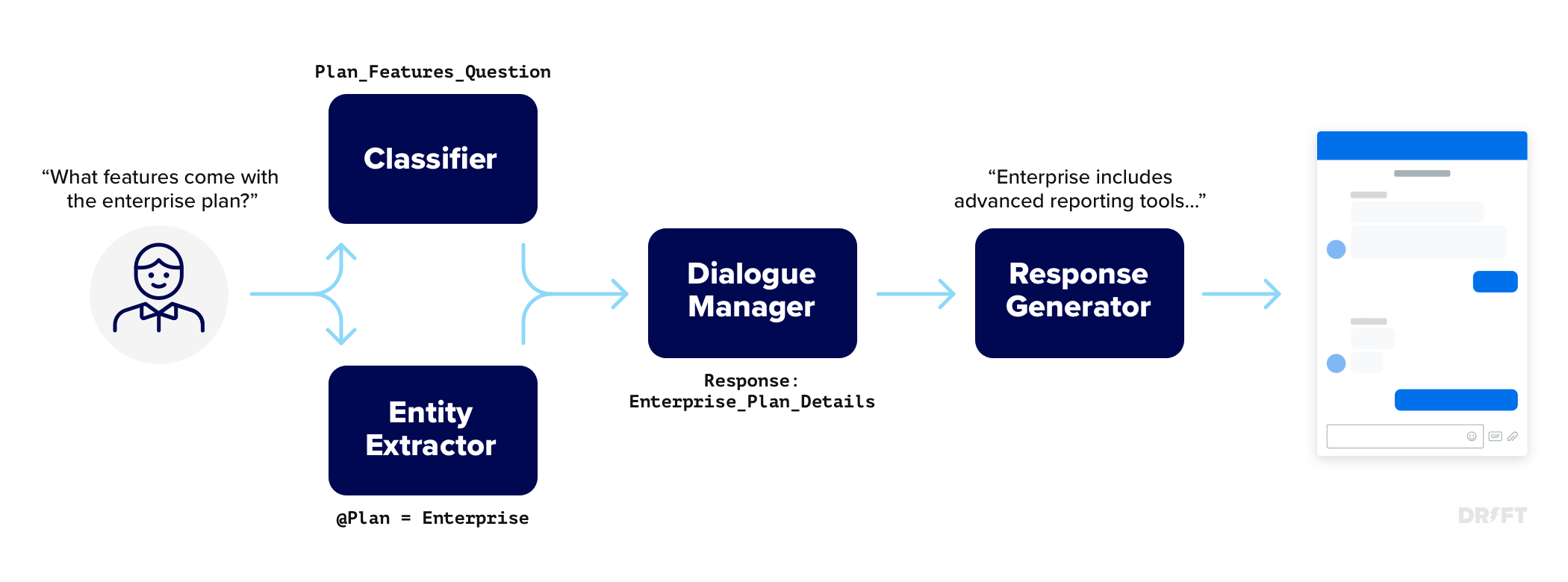

Smooth context switching like this is made possible by Drift’s patented, event-based approach to dialogue management. Before we dive into dialogue management, let’s take a step back and talk about bot architectures, and where dialogue management fits into the big picture. In general, AI bots follow the architecture shown in Figure 2, with components for user input text classification, entity extraction, dialogue management, and response generation.

Figure 2: Typical architecture of a chatbot

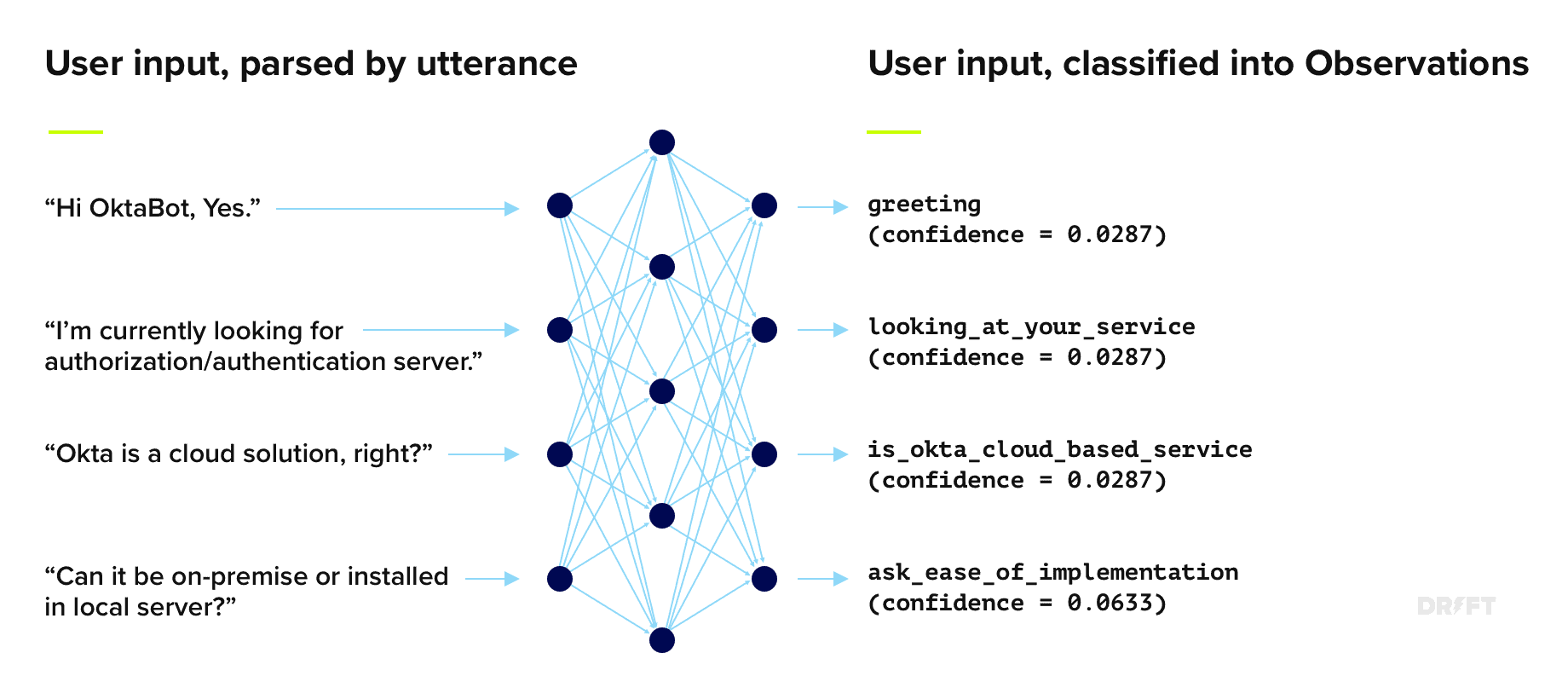

In this post, we focus on the Dialogue Manager, which receives critical input from the Classifier. The Classifier takes a string of words and assigns a label that categorizes the meaning of the input, as seen in Figure 3. Typically, classifiers return multiple possible labels for each input, ranked by a confidence score. Our confidence scores in Figure 3 might look small, but that is because the confidence is spread across about 150 possible labels per input. If even the top-ranked label’s confidence is too low, the bot asks the user for clarification. We hold our bots to high standards and invest in cutting-edge approaches throughout all components of our conversational AI system. For example, our classifier uses Google’s BERT neural language model, fine-tuned on 80 million Drift chat messages, to maximize the accuracy of understanding exactly what users are trying to say to our Automation bots.

Figure 3: Classifying user input into Observations.
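To make the ranking and the clarification fallback concrete, here is a minimal Python sketch. It is not Drift’s classifier. It assumes the model (BERT or otherwise) exposes one raw score (logit) per label, converts those scores into confidences with a softmax, and asks for clarification when even the best label falls below a cutoff. The label names and the `CLARIFY_THRESHOLD` value are hypothetical.

```python
import math

# Hypothetical cutoff: below this top-label confidence, the bot asks the user to rephrase.
CLARIFY_THRESHOLD = 0.05


def softmax(logits):
    """Turn raw per-label scores into confidences that sum to 1."""
    top = max(logits.values())  # subtract the max for numerical stability
    exps = {label: math.exp(score - top) for label, score in logits.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}


def rank_labels(logits, top_k=3):
    """Return the top_k (label, confidence) pairs, highest confidence first."""
    probs = softmax(logits)
    return sorted(probs.items(), key=lambda kv: kv[1], reverse=True)[:top_k]


def interpret(logits):
    """Pick the best label, or fall back to a clarification request."""
    ranked = rank_labels(logits)
    best_label, best_conf = ranked[0]
    if best_conf < CLARIFY_THRESHOLD:
        return "ASK_FOR_CLARIFICATION", ranked
    return best_label, ranked


# 150 hypothetical labels: one clear winner, 149 tied alternatives.
logits = {f"LABEL_{i}": 0.0 for i in range(149)}
logits["ASK_PRICING"] = 3.0
label, ranked = interpret(logits)
print(label, round(ranked[0][1], 3))  # ASK_PRICING 0.119
```

With 150 labels, a label whose logit sits 3 points above the other 149 still ends up with only about 0.12 confidence. That is why scores spread across roughly 150 labels look small even when the winner is clear.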

Drift’s architecture follows the general layout of Figure 2, but it is inside the Dialogue Manager that we diverge from the norm. The Dialogue Manager is the component that selects a bot response based on the semantic interpretation of the user’s input. This is a hard problem, especially when trying to converse coherently in a multi-turn dialogue that could last several minutes. Context and previous dialogue history matter when selecting what to say next, and real human conversation is messy, with context changing all the time and without warning.

The Problem with Intents

Nearly every other AI-powered bot on the market takes an “intent” driven approach to dialogue management. The bot classifies the input text to detect the user’s intent, and then activates a related context to guide its responses. Typically, the activated context guides the conversation with a prescribed decision tree, or a set of slots that the bot aims to fill by peppering the user with questions. The problem with this approach is that the response logic is constrained to thinking about one context at a time. While it is not impossible for these intent-driven systems to monitor for inputs that require the bot to escape the current context, it is difficult to execute well, because it requires designers to anticipate every possible interaction between contexts.
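For contrast, the Python sketch below is a deliberately simplified, hypothetical intent-driven manager. It does not model any specific vendor’s product. Each recognized intent activates a slot-filling context, and only one context can be active at a time, so a new intent silently discards the unfinished one. The intent names, slot names, and the `INFORM` label are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class SlotContext:
    intent: str
    slots: dict  # slot name -> filled value, or None if still missing

    def next_question(self):
        """Ask for the first unfilled slot, in declaration order."""
        for name, value in self.slots.items():
            if value is None:
                return f"What is your {name}?"
        return None


class IntentBot:
    """Toy (hypothetical) intent-driven dialogue manager: one active context at a time."""

    def __init__(self, intent_slots):
        self.intent_slots = intent_slots  # intent -> list of slot names
        self.active = None

    def handle(self, intent, entities):
        if intent in self.intent_slots:
            # A newly detected intent replaces the active context outright;
            # unfilled slots from the previous context are lost.
            self.active = SlotContext(intent, {s: None for s in self.intent_slots[intent]})
        if self.active is None:
            return "Sorry, I didn't understand."
        for name, value in entities.items():
            if name in self.active.slots:
                self.active.slots[name] = value
        return self.active.next_question() or f"Thanks! Routing your {self.active.intent} request."


bot = IntentBot({"QUALIFY": ["company_size", "timeline"], "ASK_PRICING": ["plan"]})
print(bot.handle("QUALIFY", {}))                      # What is your company_size?
print(bot.handle("INFORM", {"company_size": "500"}))  # What is your timeline?
print(bot.handle("ASK_PRICING", {}))                  # What is your plan?
```

In this toy, a prospect who asks about pricing halfway through qualification loses the qualification progress. Recovering it would require hand-written rules for every pair of contexts, which is the difficulty described above.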

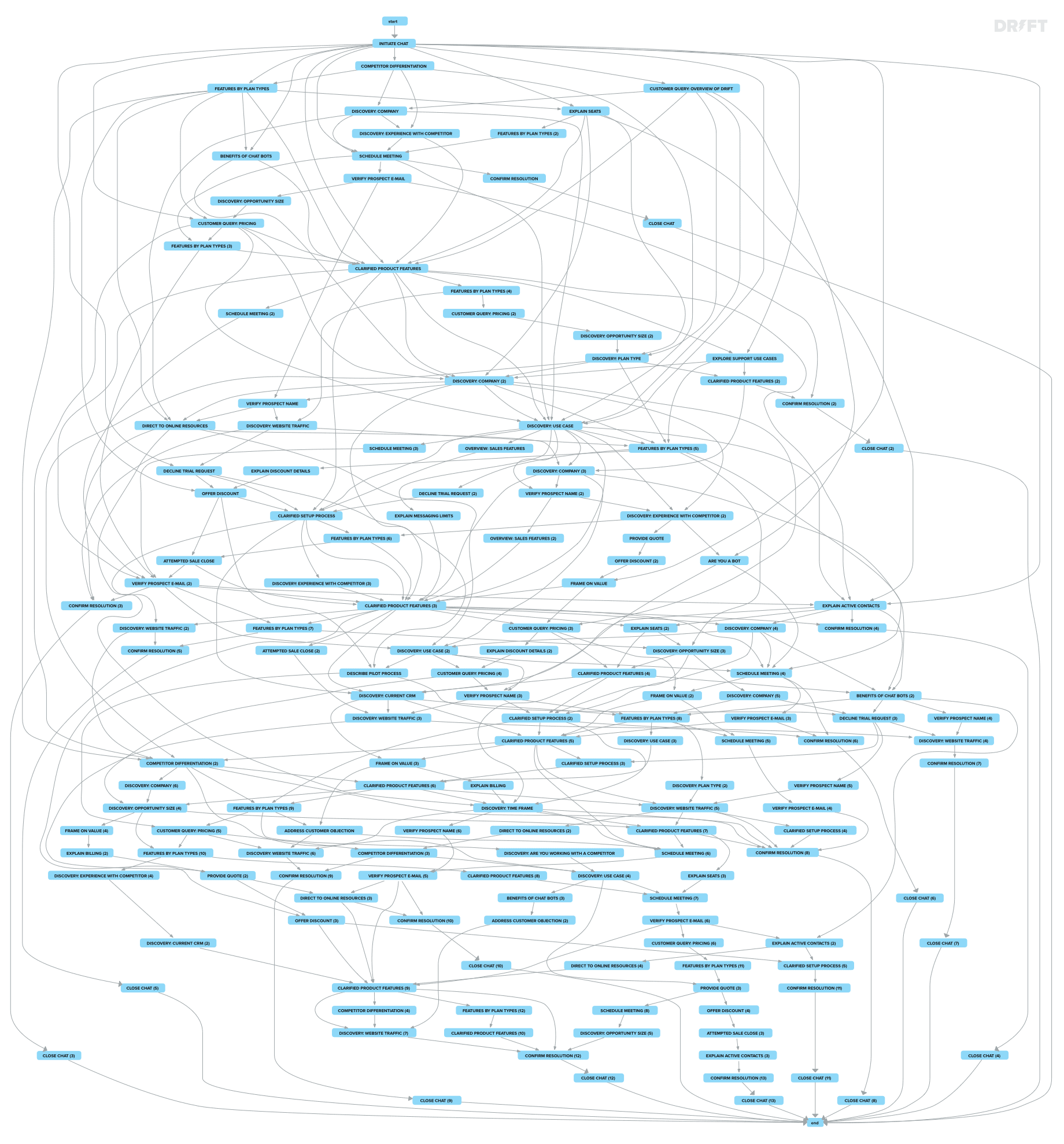

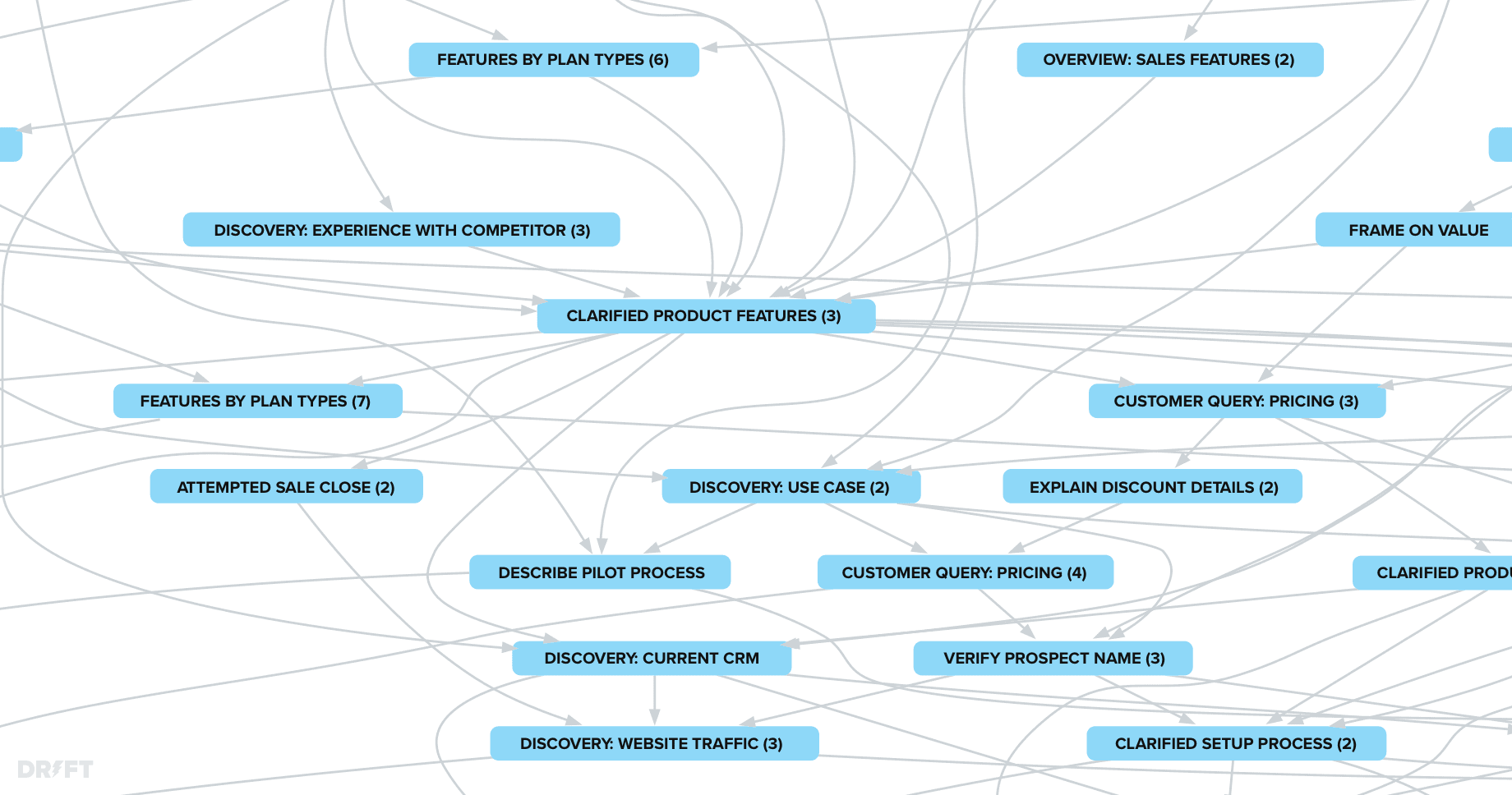

Figure 4, seen below, visualizes the sequences of topics observed in 100 conversations with Drift sales reps. It shows why supporting flexible context switching is so critically important to delivering a human-like conversational user experience. Real conversations are messy and nuanced. Every path is different. Further down, Figure 5 zooms in to show that many conversations hit the same topics, just in a different order, while in others users jump back and forth, revisiting the same topic with further questions.

Figure 4: Conversational paths observed in 100 Drift chat conversations between humans.

Figure 5: A zoomed-in section of Figure 4.

Events, Not Intents

With Drift’s technology, however, we take an entirely different approach, based on “events” rather than intents. Every time the user types text or clicks a button, they generate a new observation. With each observation received, our AI either appends the observation to an existing event or starts a new event with it. There is no limit to the number of events, and any event can be extended at any time. This gives our bots a lot of flexibility to fluidly switch contexts, either to a new context or back to one previously in progress.
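The bookkeeping described above can be sketched in a few lines of Python. The `Event` and `EventTracker` names and the observation labels are hypothetical. Deciding which event to extend is left to the caller in this sketch, because that decision is a separate problem.

```python
from dataclasses import dataclass, field


@dataclass
class Event:
    label: str
    observations: list = field(default_factory=list)


class EventTracker:
    """Toy (hypothetical) event store: unlimited events, any of which can be extended later."""

    def __init__(self):
        self.events = []

    def add_observation(self, observation, extend_index=None, new_label=None):
        """Append to events[extend_index], or start a new event labeled new_label."""
        if extend_index is not None:
            event = self.events[extend_index]  # may be an event started many turns ago
            event.observations.append(observation)
            return event
        if new_label is None:
            raise ValueError("either extend_index or new_label is required")
        event = Event(new_label, [observation])
        self.events.append(event)
        return event


# Hypothetical observation labels.
tracker = EventTracker()
tracker.add_observation("OFFER_MEETING", new_label="OFFER_SALES_MEETING")
tracker.add_observation("ASK_ON_PREM", new_label="DISCUSS_ON_PREM_DEPLOYMENT")  # context switch
tracker.add_observation("ACCEPT", extend_index=0)  # return to the earlier event
print([(e.label, e.observations) for e in tracker.events])
```

Because every event stays in the list, returning to an earlier context is just another append, with no special escape logic.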

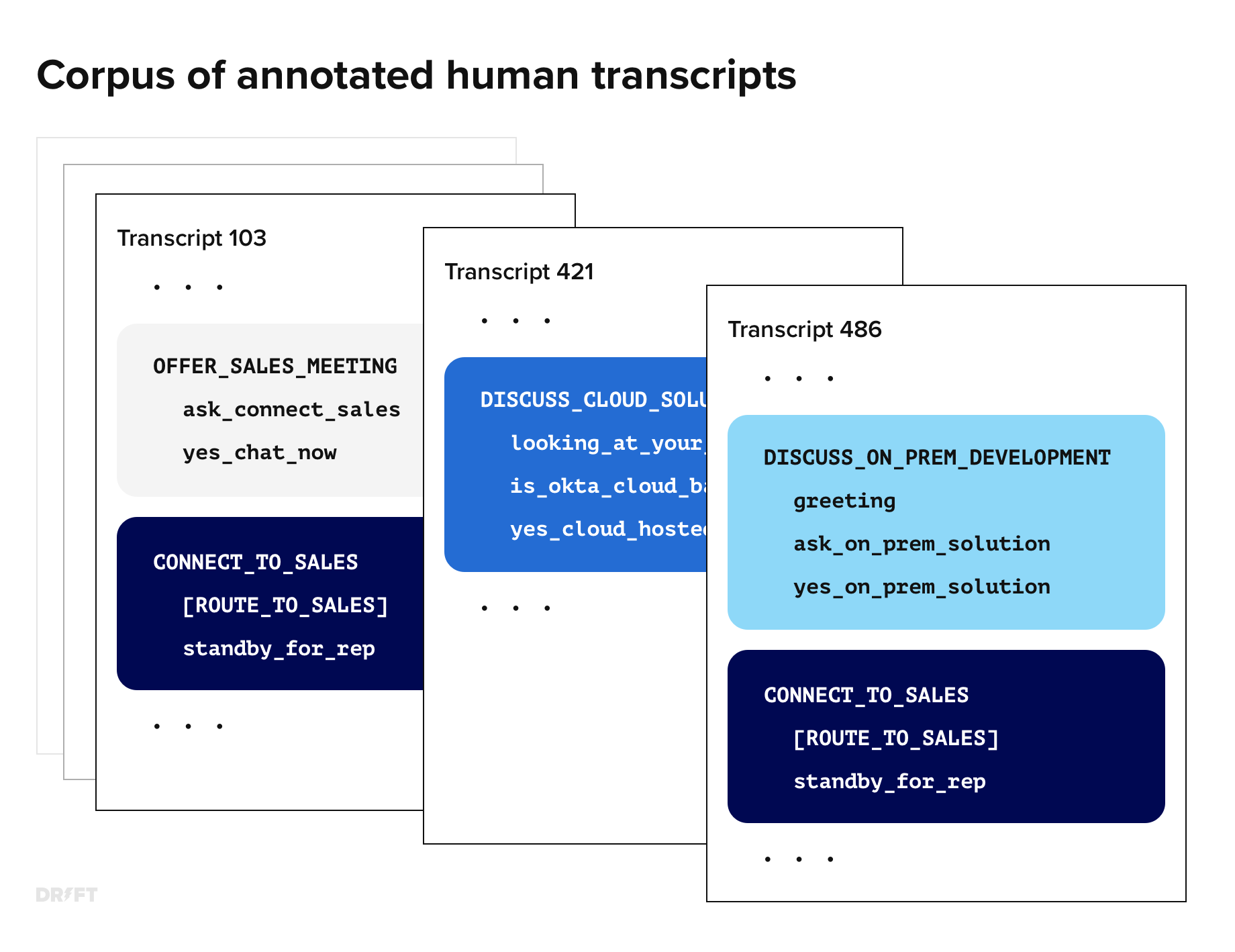

The challenge is knowing which event to extend, if any, or whether a new event should begin. There is often a lot of ambiguity in this decision. We resolve this by comparing the events we are forming in real-time to a corpus of conversation transcripts that have been annotated with the same set of observation and event labels. This approach can be described as reasoning by analogy. Our bot decides how to put observations into context, and how to select a coherent response, by comparing to event annotations in transcripts that we have seen in the past.

Figure 6: Corpus of human-human chat transcripts, annotated with Observations and Events.
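One simple way to picture this reasoning by analogy is the Python sketch below. It is a simplified illustration, not the patented method. It treats the annotated corpus as a map from event label to observation sequences (event expressions). It then scores each hypothesis, either extending an active event or starting a new one, by how many annotated expressions that hypothesis is consistent with as a prefix. The labels and the tie-breaking rule (prefer extending an existing event) are assumptions.

```python
def is_prefix(seq, expr):
    """True if seq matches the first len(seq) observations of expr."""
    return len(seq) <= len(expr) and list(expr[: len(seq)]) == list(seq)


def choose_event(active_events, observation, corpus):
    """
    active_events: list of (event_label, observations) for events in progress.
    corpus: event_label -> list of annotated event expressions (observation sequences).
    Returns ("extend", index), ("new", event_label), or ("none", None).
    """
    best, best_support = ("none", None), 0
    # Hypothesis A: extending an active event is still consistent with some expression.
    for i, (label, obs) in enumerate(active_events):
        candidate = list(obs) + [observation]
        support = sum(is_prefix(candidate, expr) for expr in corpus.get(label, []))
        if support > best_support:
            best, best_support = ("extend", i), support
    # Hypothesis B: some annotated expression begins with this observation.
    # Strict ">" means an equally supported extension (checked first) wins ties.
    for label, exprs in corpus.items():
        support = sum(is_prefix([observation], expr) for expr in exprs)
        if support > best_support:
            best, best_support = ("new", label), support
    return best


# Hypothetical annotated corpus.
corpus = {
    "DISCUSS_ON_PREM_DEPLOYMENT": [
        ["ASK_ON_PREM", "ANSWER_ON_PREM", "ACK"],
        ["ASK_ON_PREM", "ANSWER_ON_PREM"],
    ],
    "OFFER_SALES_MEETING": [["OFFER_MEETING", "ACCEPT"], ["OFFER_MEETING", "DECLINE"]],
}
active = [("OFFER_SALES_MEETING", ["OFFER_MEETING"])]
print(choose_event(active, "ASK_ON_PREM", corpus))     # ('new', 'DISCUSS_ON_PREM_DEPLOYMENT')
active.append(("DISCUSS_ON_PREM_DEPLOYMENT", ["ASK_ON_PREM"]))
print(choose_event(active, "ANSWER_ON_PREM", corpus))  # ('extend', 1)
print(choose_event(active, "ACCEPT", corpus))          # ('extend', 0)
```

The last call shows a late ACCEPT extending OFFER_SALES_MEETING, an event started earlier. This is the kind of return to a previous context that Figure 1 illustrates.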

Bot University: Where AI Learns to Converse

It may sound like a lot of work to annotate transcripts, and indeed it is. But don’t worry, we have your back. Each Drift Automation customer organization is paired with one of our AI Conversation Designers (AICDs), who is an expert in our data annotation pipeline, called Bot University – where our bots are schooled in understanding our customers’ conversations. Our AICDs use Bot U to mine human-to-human chat transcripts to learn qualification criteria, how to answer questions, and how conversations move from event to event. Once a bot goes live, AICDs also review the human-bot transcripts to score accuracy, correct errors, and add fresh training data on a regular basis.

Figure 7: Reviewing a human-bot transcript to score accuracy in Bot University.

Responsible AI, with Humans in the Loop

Our bots respond by querying what was said next in the most similar context in our database of annotated transcripts. Importantly, each bot is powered by a database of annotated transcripts specific to that bot’s customer organization. Bots will only respond with something that the company’s sales reps have said before, or something that has been authored by one of our designers and vetted by the customer organization for their bot. With this human-in-the-loop approach, there is no risk of a bot repeating something it learned on the internet, as a model trained on web text, such as GPT-3, might.
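As a rough sketch of “querying what was said next,” the Python below makes several hypothetical choices. Each annotated transcript is a list of (observation label, rep reply) pairs. Context is simplified to the live chat’s observation history rather than full events, and similarity is measured as the length of the longest shared suffix of observation labels. Because the reply is always copied from a transcript, the bot can only say what a rep already said.

```python
def common_suffix_len(a, b):
    """Number of trailing items shared by sequences a and b."""
    n = 0
    while n < len(a) and n < len(b) and a[-1 - n] == b[-1 - n]:
        n += 1
    return n


def select_response(history, transcripts):
    """
    history: observation labels seen so far in the live chat.
    transcripts: annotated human-human transcripts, each a list of
        (observation_label, rep_reply) pairs, where rep_reply is what the
        sales rep said right after that observation.
    Returns the reply that followed the most similar context, or None.
    """
    best_reply, best_score = None, 0
    for transcript in transcripts:
        labels = [label for label, _ in transcript]
        for i, (_, reply) in enumerate(transcript):
            # Compare the live history with the transcript's context up to turn i.
            score = common_suffix_len(history, labels[: i + 1])
            if score > best_score:
                best_reply, best_score = reply, score
    return best_reply  # always a verbatim reply from the corpus, never generated text


# Hypothetical annotated transcripts.
transcripts = [
    [("GREETING", "Hi! What brings you here today?"),
     ("ASK_PRICING", "Pricing depends on seats. How many users do you have?")],
    [("ASK_ON_PREM", "Yes, we support on-prem deployments."),
     ("ASK_PRICING", "On-prem pricing is quoted separately; want me to connect you with sales?")],
]
print(select_response(["ASK_ON_PREM", "ASK_PRICING"], transcripts))
```

Both transcripts contain ASK_PRICING, but the second one also matches the preceding ASK_ON_PREM, so its reply wins. Longer shared context outranks a single matching turn.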

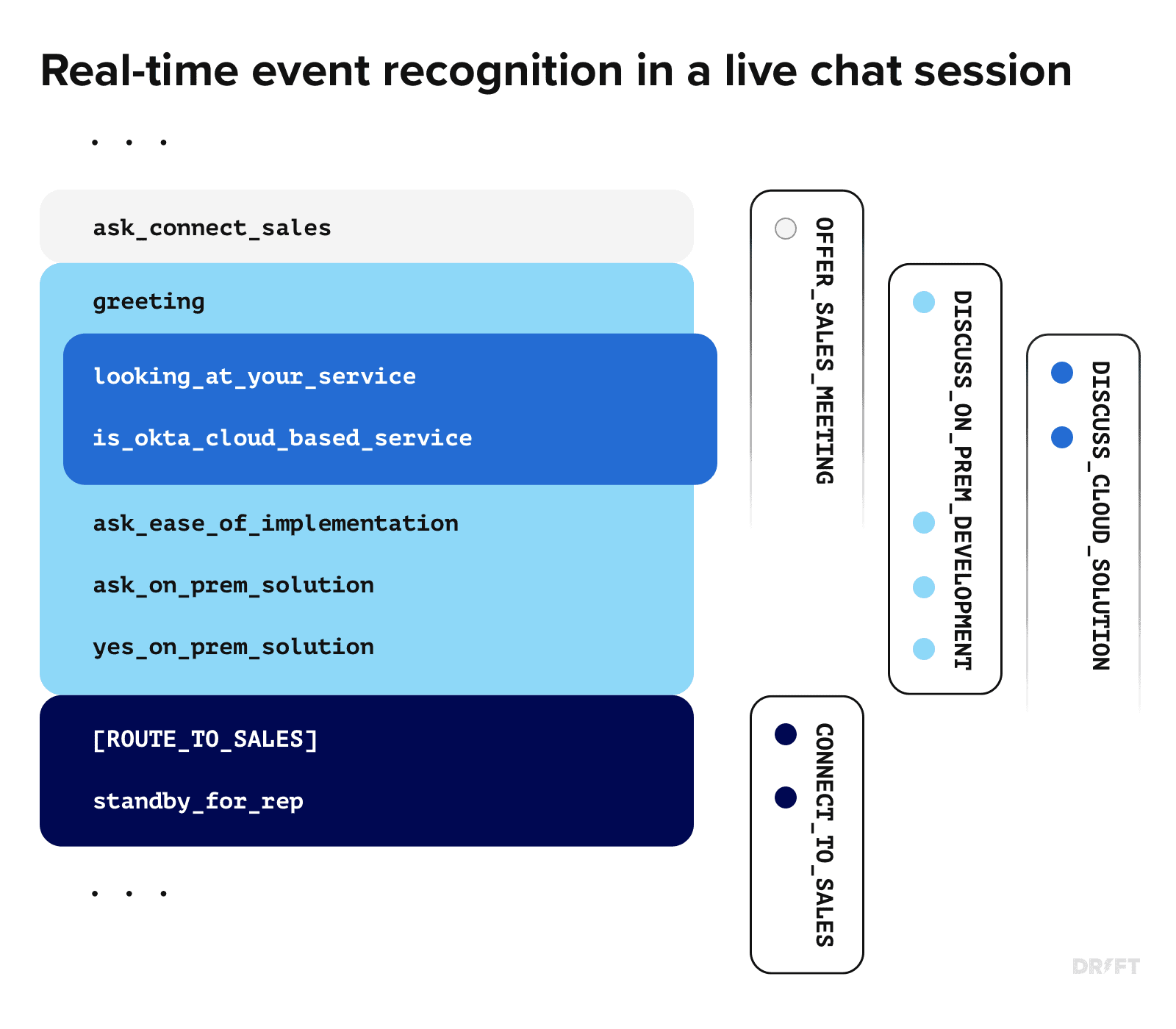

Figure 8: Selecting a response by recognizing events in real time, leveraging Observations classified in Figure 3.

The Infinite Variability of Language

Figure 8, above, illustrates how Drift AI manages multiple active events at once. Those who look closely at Figure 8 might notice a couple of things. First, a couple of events never get completed (OFFER_SALES_MEETING and DISCUSS_CLOUD_SOLUTION). Our approach allows the bot to start an event and switch contexts to another event at any time. Those incomplete events remain active, in case the user wishes to return to them in a future turn. Second, the example of DISCUSS_ON_PREM_DEPLOYMENT in Figure 8 is not an exact match of the pattern for the same event in Figure 6: the example in Figure 8 contains four utterances rather than three. We have two mechanisms that give us this flexibility: event expressions and event aliasing. In our corpus of annotated transcripts, we capture many variations of the same event, referred to as event expressions. Due to the infinite variability of language, we will never capture every possible event expression. Event aliasing addresses this challenge. When we cannot find an exact match for a sequence in a live session, we label it using the closest match we’ve seen in the annotated transcripts. Once a sequence is aliased, downstream processing can’t tell the difference between aliased and exact matches, so dialogue management and response generation can proceed on a simplified model that precisely matches examples in our annotated corpus.
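Event aliasing can be illustrated with a classic sequence-similarity measure. The Python sketch below uses Levenshtein edit distance over observation labels as the notion of “closest match.” This metric is an assumed stand-in, not necessarily the one used in production, and the `max_distance` cutoff and labels are hypothetical. In the example, a four-observation live sequence aliases to a three-observation annotated expression, because a single deletion makes them identical.

```python
def edit_distance(a, b):
    """Levenshtein distance between two sequences of observation labels."""
    prev = list(range(len(b) + 1))
    for i, x in enumerate(a, start=1):
        cur = [i]
        for j, y in enumerate(b, start=1):
            cur.append(min(prev[j] + 1,              # delete x
                           cur[j - 1] + 1,           # insert y
                           prev[j - 1] + (x != y)))  # substitute (free if equal)
        prev = cur
    return prev[-1]


def alias_event(sequence, corpus, max_distance=1):
    """
    Label a live observation sequence with its closest annotated event expression.
    corpus: event_label -> list of event expressions.
    Returns (event_label, expression), or None if nothing is within max_distance
    (max_distance is a hypothetical tolerance).
    """
    best, best_dist = None, max_distance + 1
    for label, expressions in corpus.items():
        for expr in expressions:
            d = edit_distance(sequence, expr)
            if d < best_dist:
                best, best_dist = (label, expr), d
    return best


# Hypothetical annotated corpus and live sequence.
corpus = {
    "DISCUSS_ON_PREM_DEPLOYMENT": [["ASK_ON_PREM", "ANSWER_ON_PREM", "ACK"]],
    "OFFER_SALES_MEETING": [["OFFER_MEETING", "ACCEPT"]],
}
live = ["ASK_ON_PREM", "ASK_FOLLOW_UP", "ANSWER_ON_PREM", "ACK"]  # four observations, not three
print(alias_event(live, corpus))
```

Because `alias_event` returns the matched annotated expression itself, later steps can work with that expression directly. In this sketch, as in the text, an aliased match and an exact match look the same downstream.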

We hope this article shed some light on how our bots are empowered with advanced conversational capabilities that delight our customers. We’ll be sharing much more about Drift’s patented AI in future posts. Stay tuned.